Projects

Structured Data Projects

AdTracking Fraud Detection (Kaggle)

I performed data preprocessing, feature engineering and machine learning algorithms to predict fraudulent advertisement click and achieve top 15% result on Kaggle Private leaderboard. Competition link

Dataset type: structured timeseries data of 180+ milion records timeseries data. Labels are imbalanced: only 0.2% of the dataset is made up of fradulent clicks.

Software, libraries and models:

- Python, Pandas, Sklearn, Matplotlib, Pytorch (Fastai)

- XGBoost, Random Forest, Neural Network with category embeddings

Google Timeline Location Analysis

I took a deep dive and performed intensive data analysis and visualization on unlabeled GPS dataset collected by Google Timeline application. From there I cleaned out inaccurate data features, transformed raw data into analysis-ready data and applied few clustering algorithms to identify clusters that can review user's habit and common routine.

This project is featured on TechTarget analytics blog

Dataset type: geographic dataset of 600K+ coordinates and metadata.

Software, libraries and models:

- Python, Pandas, Sklearn; Seaborn and Folium for interactive visualization

- K-means, Meanshift

Future Sales Prediction (Kaggle)

I built and finetuned one machine learning model to predict sales for every product and store in the next month. The final model (Light Gradient Boosting Machine) reached 7th position (out of 200) on Kaggle Public Leaderboard as of 4/16/2018. Competition link

Dataset type: structured timeseries data of 6 million records from one of the largest Russian software firms: 1C company.

Software, libraries and models:

- Python, Pandas, Sklearn, Matplotlib

- Light GBM, Random Forest

Computer Vision Projects

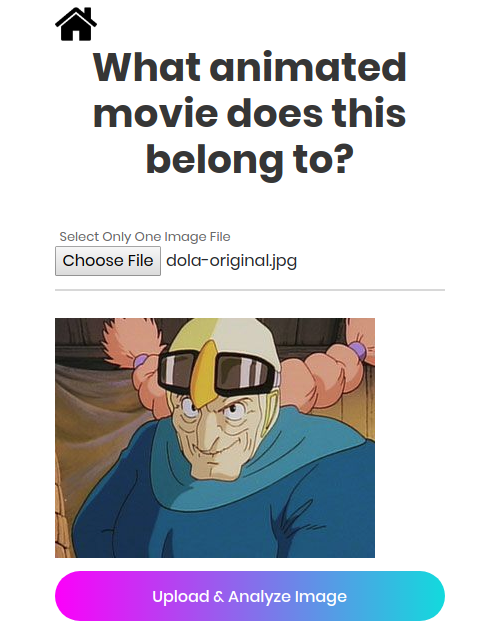

Animated Movie Classification + live demo

I built a deep learning model to identify a scene/character from 10 animated classic movies (4 from Ghibli Studios, 6 from Disney) and published it as a web application that you can test here.

I also write a blog to show how I built it and how I debug my model using Grad-CAM visualization technique.

Dataset type: data collected from movie frames and internet

Software, libraries and models:

- For image preprocessing: skimage

- For training and visualization: Fastai v1 (Pytorch) using Resnet50 architecture, Matplotlib

- For deployment: Docker, Starlette.io framework & Uvicorn ASGI server, hosted by

Amazon BeanstalkRender

Natural Language Processing Projects

End-to-end deep learning for tabular and text

I create a data module to handle text data and tabular data (metadata) and built a joint deep learning architecture which combines a recurrent neural network model (AWD-LSTM) and multi-layer perceptron with categorical embedding in order to train both types of data efficiently.

You can follow the discussion on fastai forum

This architecture is adopted by Reply.ai to improve customer experience with better ticket classification. Read Reply.ai TowardDataScience's article here

Dataset type: model is tested on Kaggle PetFinder and Kaggle Mercari Price datasets

Sofware, libraries and models:

- Python, Pytorch (Fastai)

- AWD-LSTM, ULMFiT, Neural network with category embeddings

"Machine Learning from Scratch" project

1. A quick summary:

I didn't know much about machine learning until the last semester of my undergrad when I took a data mining class as an elective. Since then I have always been fascinated by how versatile machine learning is when it comes to solving problems. Since then I have taken several online machine learning courses such as Andrew Ng's famous ML course or Jeremy Howard and Rachel Thomas's practical course.

Using all the knowledge I have learned, I decide to rebuild some common machine learning algorithms using only Python and limited Numpy functions and compared them to existing models from popular machine learning framework such as Sklearn or Pytorch.

My goal is to fully understand the algorithms and libraries I have been used for Kaggle competitions or other personal projects. So far this project has helped me tremendously in gaining insight on how these models work, what is really happening at each iteration, how and why models need these parameters ... and even more basic stuff such as how data comes in and out of a model or what matrix multiplication looks like in code.

Some of the code might not be refactored well, but my goal is not to optimize these algorithms or to push them to production; there are already so many out there. I rather have their inner workings laid out in a transparent way so I can visit later.

2. List of algorithms implemented:

(Links to jupyter notebooks are included)

- Linear Regression with weight decay (L2 regularization)

- Logistic Regression with weight decay (L2 regularization)

- Random Forest with Permutation Feature Importances (inspired by Terence Parr's blog post)

- K Nearest Neighbors: supervised and unsupervised

-

(Deep) Neural Network for classification

- Stochastic Gradient Descent

- More hidden layers can be added dynamically

- Variety of activation functions + gradients (Sigmoid, Softmax, ReLU ...) customized for each hidden layer

- Weight decay

- Dropout

- Dynamic learning rate optimizer (momentum, RMSProp and Adam)

- TODO: Batchnorm